Deployments


Understanding Deployments

Refer to the official Kubernetes documentation: Deployments

A Deployment is a higher-level Kubernetes workload resource that declaratively manages and updates Pod replicas for your applications. It provides a robust, flexible way to define how your application should run, including how many replicas to maintain and how to perform rolling updates safely.

A Deployment is an object in the Kubernetes API that manages Pods and ReplicaSets. When you create a Deployment, Kubernetes automatically creates a ReplicaSet, which is then responsible for maintaining the specified number of Pod replicas.

Using Deployments gives you the following capabilities:

- Declarative Management: Define the desired state of your application, and Kubernetes automatically ensures the cluster's actual state matches the desired state.

- Version Control and Rollback: Track each revision of a Deployment and easily roll back to a previous stable version if issues arise.

- Zero-Downtime Updates: Gradually update your application using a rolling update strategy without service interruption.

- Self-Healing: Crashed containers are restarted by the kubelet, and Pods that are deleted, evicted, or lost with a failed node are replaced by the Deployment's ReplicaSet, so the specified number of Pods stays available.

How it works:

- You define the desired state of your application through a Deployment (e.g., which image to use, how many replicas to run).

- The Deployment creates a ReplicaSet to ensure the specified number of Pods are running.

- The ReplicaSet creates and manages the actual Pod instances.

- When you update the Deployment's Pod template (e.g., change the image version), the Deployment creates a new ReplicaSet and gradually replaces the old Pods with new ones according to the rolling update strategy. Once all new Pods are running, the old ReplicaSet is scaled down to 0 replicas. It is kept (up to `.spec.revisionHistoryLimit`, 10 by default) so that you can roll back.

Creating Deployments

Creating a Deployment by using CLI

Prerequisites

- Ensure you have `kubectl` configured and connected to your cluster.

YAML file example

Creating a Deployment via YAML
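The following is a minimal sketch that writes a basic Deployment manifest to `nginx-deployment.yaml`. Treat the values as assumptions to adapt:

- The name `nginx-deployment`, the `app: nginx` label, and the `nginx:1.14.2` image follow the upstream Kubernetes nginx example, which the update steps later on this page also refer to.
- The explicit rolling update values are assumptions as well.

```shell
# Write a minimal Deployment manifest (hypothetical name: nginx-deployment).
cat <<'EOF' > nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3                  # desired number of Pod replicas
  selector:
    matchLabels:
      app: nginx               # must match the Pod template labels below
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1              # at most 1 extra Pod during an update
      maxUnavailable: 1        # at most 1 Pod unavailable during an update
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
EOF
```

Create the Deployment with `kubectl apply -f nginx-deployment.yaml`. The selector in `spec.selector.matchLabels` must match the Pod template labels, otherwise the API server rejects the manifest.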

Creating a Deployment by using web console

Prerequisites

Obtain the image address. Images can come from either of two sources:

- An image repository that the platform administrator has integrated through the toolchain.
- A third-party platform's image repository.

Prepare access according to the source:

- For an integrated repository, the administrator typically assigns the repository to your project, and you can use the images in it. If you cannot find the required image repository, contact the administrator to have it assigned.

- For a third-party image repository, ensure that the current cluster can pull images from it directly.

- If the image registry requires authentication, configure the corresponding image pull secret. For more information, see Add ImagePullSecrets to ServiceAccount.

Procedure - Configure Basic Info

- In Container Platform, navigate to Workloads > Deployments in the left sidebar.

- Click Create Deployment.

- Select or enter an image, and click Confirm.

Note: When using images from an image repository integrated into the web console, you can filter images by Already Integrated. The integration project name, for example images (docker-registry-projectname), includes the project name projectname in this web console and the project name containers in the image repository.

- In the Basic Info section, configure the declarative parameters for the Deployment workload:

- Replicas: Defines the desired number of Pod replicas in the Deployment (default: 1). Adjust based on workload requirements.

- More > Update Strategy: Configures the `RollingUpdate` strategy for zero-downtime deployments:
  - Max surge (`maxSurge`): Maximum number of Pods that can exceed the desired replica count during an update.
    - Accepts absolute values (e.g., `2`) or percentages (e.g., `20%`).
    - Percentage calculation: `ceil(desired_replicas × percentage)`.
    - Example: 4.1 → `5` when calculated from 10 replicas.
  - Max unavailable (`maxUnavailable`): Maximum number of Pods that can be temporarily unavailable during an update.
    - Percentage values cannot exceed `100%`.
    - Percentage calculation: `floor(desired_replicas × percentage)`.
    - Example: 4.9 → `4` when calculated from 10 replicas.

1. Default values: `maxSurge=1`, `maxUnavailable=1` if not explicitly set.

2. Non-running Pods (e.g., in `Pending` or `CrashLoopBackOff` states) are considered unavailable.

3. Simultaneous constraints:
   - `maxSurge` and `maxUnavailable` cannot both be `0` or `0%`.
   - If percentage values resolve to `0` for both parameters, Kubernetes forces `maxUnavailable=1` to ensure update progress.

For a Deployment with 10 replicas:
- `maxSurge=2` → Maximum total Pods during update: `10 + 2 = 12`.
- `maxUnavailable=3` → Minimum available Pods: `10 - 3 = 7`.
- This ensures availability while allowing a controlled rollout.
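The rounding rules above can be checked with a small POSIX shell sketch. The function names are hypothetical. The code illustrates the documented behavior and is not the Deployment controller's own code. It assumes whole-number percentages such as `25%`.

```shell
#!/bin/sh
# Sketch: resolve maxSurge / maxUnavailable to absolute Pod counts.
# Percentages round UP for maxSurge and DOWN for maxUnavailable.

# resolve_value VALUE REPLICAS ROUND_UP   (ROUND_UP: 1 = ceil, 0 = floor)
resolve_value() {
  value=$1 replicas=$2 round_up=$3
  case $value in
    *%)
      pct=${value%?}                                 # drop the trailing '%'
      if [ "$round_up" -eq 1 ]; then
        echo $(( (replicas * pct + 99) / 100 ))      # ceil(replicas * pct / 100)
      else
        echo $(( replicas * pct / 100 ))             # floor(replicas * pct / 100)
      fi
      ;;
    *)
      echo "$value"                                  # absolute value: use as-is
      ;;
  esac
}

# resolve_fenceposts MAX_SURGE MAX_UNAVAILABLE REPLICAS -> prints "SURGE UNAVAILABLE"
resolve_fenceposts() {
  surge=$(resolve_value "$1" "$3" 1)
  unavailable=$(resolve_value "$2" "$3" 0)
  # Explicit 0/0 is rejected by validation; if rounding yields 0 for both,
  # force maxUnavailable=1 so the rollout can still make progress.
  if [ "$surge" -eq 0 ] && [ "$unavailable" -eq 0 ]; then
    unavailable=1
  fi
  echo "$surge $unavailable"
}

# Example from the text: 10 replicas, maxSurge=2, maxUnavailable=3.
set -- $(resolve_fenceposts 2 3 10)
echo "max Pods during update: $(( 10 + $1 ))"   # 10 + 2 = 12
echo "min available Pods: $(( 10 - $2 ))"       # 10 - 3 = 7
```

For example, `resolve_fenceposts 41% 49% 10` prints `5 4`, matching the 4.1 → 5 and 4.9 → 4 examples.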

Procedure - Configure Pod

Note: In mixed-architecture clusters deploying single-architecture images, ensure proper Node Affinity Rules are configured for Pod scheduling.

- In the Pod section, configure container runtime parameters and lifecycle management:

- Volumes: Mount persistent volumes to containers. Supported volume types include PVC, ConfigMap, Secret, emptyDir, hostPath, and so on. For implementation details, see Volume Mounting Guide.

- Pull Secret: Required only when pulling images from third-party registries (via manual image URL input). Note: This is the Secret used for authentication when pulling images from a secured registry.

- Close Grace Period: Duration (default: 30s) allowed for a Pod to complete a graceful shutdown after receiving the termination signal.
  - During this period, the Pod completes in-flight requests and releases resources.
  - Setting `0` forces immediate deletion (SIGKILL), which may interrupt requests.

- Node Affinity Rules

| Parameters | Description |

|---|---|

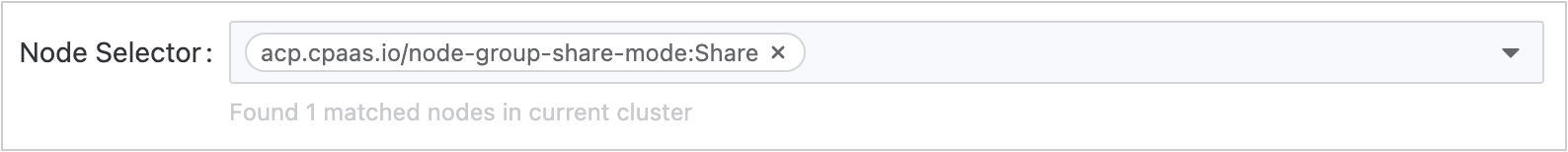

| More > Node Selector | Constrain Pods to nodes with specific labels (e.g. kubernetes.io/os: linux).  |

| More > Affinity | Define fine-grained scheduling rules based on existing. Affinity Types:

|

- Network Configuration

  - Kube-OVN
    - Bandwidth Limits: Enforce QoS for Pod network traffic:
      - Egress rate limit: Maximum outbound traffic rate (e.g., `10Mbps`).
      - Ingress rate limit: Maximum inbound traffic rate.
    - Subnet: Assign IPs from a predefined subnet pool. If unspecified, the namespace's default subnet is used.
    - Static IP Address: Bind persistent IP addresses to Pods:
      - Multiple Pods across Deployments can claim the same IP, but only one Pod can use it at a time.
      - Critical: The number of static IPs must be ≥ the Pod replica count.

  - Calico
    - Static IP Address: Assign fixed IPs with strict uniqueness:
      - Each IP can be bound to only one Pod in the cluster.
      - Critical: The number of static IPs must be ≥ the Pod replica count.
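The Pod-level settings above map to fields under `spec.template.spec`. As a sketch, the following writes them as a strategic merge patch. The Secret name `registry-pull-secret`, the `amd64` architecture value, and the target Deployment `nginx-deployment` are hypothetical. The network options are CNI-specific and are not shown.

```shell
# Write a strategic merge patch with Pod-level settings (hypothetical values).
cat <<'EOF' > pod-settings-patch.yaml
spec:
  template:
    spec:
      # Close Grace Period: seconds allowed for graceful shutdown (default 30).
      terminationGracePeriodSeconds: 30
      # Pull Secret: registry credentials (hypothetical Secret name).
      imagePullSecrets:
        - name: registry-pull-secret
      # More > Node Selector: schedule only onto Linux nodes.
      nodeSelector:
        kubernetes.io/os: linux
      # More > Affinity: keep a single-architecture image on matching nodes,
      # as recommended for mixed-architecture clusters.
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
EOF
```

Apply it with `kubectl patch deployment nginx-deployment --patch-file pod-settings-patch.yaml`. Because the Pod template changes, this starts a new rollout.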

Procedure - Configure Containers

- In the Container section, configure the following parameters:

- Resource Requests & Limits:
  - Requests: Minimum CPU/memory required for the container to run.
  - Limits: Maximum CPU/memory the container is allowed to use. For unit definitions, see Resource Units.
  - Without an overcommit ratio:
    - If namespace resource quotas exist: Container requests/limits inherit the namespace defaults (modifiable).
    - If there are no namespace quotas: No defaults are set; specify custom requests.
  - With an overcommit ratio: Requests are auto-calculated as `Limits / Overcommit ratio` (immutable).
  - Constraints:
    - Request ≤ Limit ≤ Namespace quota maximum.
    - Changes to the overcommit ratio take effect only after the Pods are recreated.
    - An overcommit ratio disables manual request configuration.
    - No namespace quotas → no container resource constraints.

- Extended Resources: Configure cluster-available extended resources (e.g., vGPU, pGPU).

- Volume Mounts: Persistent storage configuration. See Storage Volume Mounting Instructions.
  - Operations:
    - Existing Pod volumes: Click Add.
    - No Pod volumes: Click Add & Mount.
  - Fields:
    - `mountPath`: Container filesystem path (e.g., `/data`).
    - `subPath`: Relative file/directory path within the volume. For ConfigMap/Secret: select a specific key.
    - `readOnly`: Mount as read-only (default: read-write).

- Ports: Expose container ports.
  - Example: Expose TCP port `6379` with name `redis`.
  - Fields:
    - `protocol`: TCP/UDP.
    - Port: Exposed port (e.g., `6379`).
    - `name`: DNS-compliant identifier (e.g., `redis`).

- Startup Commands & Arguments: Override the image's default ENTRYPOINT/CMD.
  - Example 1: Execute `top -b`:
    - Command: `["top", "-b"]`
    - Or Command: `["top"]`, Args: `["-b"]`
  - Example 2: Output `$MESSAGE`: `/bin/sh -c "while true; do echo $(MESSAGE); sleep 10; done"`
  - See Defining Commands.

- More > Environment Variables:
  - Static values: Direct key-value pairs.
  - Dynamic values: Reference ConfigMap/Secret keys, Pod fields (`fieldRef`), or container resource requests/limits (`resourceFieldRef`).

- More > Referenced ConfigMaps: Inject an entire ConfigMap/Secret as environment variables. Supported Secret types: `Opaque`, `kubernetes.io/basic-auth`.

- More > Health Checks:
  - Liveness Probe: Detects container health (the container is restarted if the probe fails).
  - Readiness Probe: Detects service availability (the Pod is removed from Service endpoints if the probe fails).

- More > Log Files: Configure log paths:
  - Default: Collect `stdout`.
  - File patterns: e.g., `/var/log/*.log`.
  - Requirements:
    - Storage driver `overlay2`: Supported by default.
    - Storage driver `devicemapper`: Manually mount an EmptyDir to the log directory.
    - Windows nodes: Ensure the parent directory is mounted (e.g., `c:/a` for `c:/a/b/c/*.log`).

- More > Exclude Log Files: Exclude specific logs from collection (e.g., `/var/log/aaa.log`).

- More > Execute before Stopping: Execute commands before container termination.
  - Example: `echo "stop"`
  - Note: Command execution time must be shorter than the Pod's `terminationGracePeriodSeconds`.

- Click Add Container (upper right) or Add Init Container.

  See Init Containers. Init containers:
  - Start before app containers and run sequentially.
  - Release their resources after completion.
  - Deletion allowed when the Pod has more than one app container and at least one init container.
  - Deletion not allowed for Pods with a single app container.

- Click Create.
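The following sketch writes a Pod template fragment showing how the container settings above correspond to Kubernetes fields. Note the following:

- Every name, image, and value is hypothetical.
- The requests assume an overcommit ratio of 2, purely to illustrate `Limits / Overcommit ratio`.
- The quoted heredoc delimiter (`'EOF'`) stops the shell from expanding `$(MAXMEMORY)`. Kubernetes therefore receives it literally and substitutes the container's `MAXMEMORY` variable.

```shell
# Write a Pod template fragment covering the container settings (hypothetical values).
cat <<'EOF' > container-fragment.yaml
# Fragment of a Deployment's spec.template.spec
initContainers:
  - name: init-setup                # runs to completion before app containers start
    image: busybox:1.36
    command: ["sh", "-c", "echo initializing"]
containers:
  - name: redis
    image: redis:7
    # Startup Commands & Arguments: Kubernetes expands $(MAXMEMORY)
    # from this container's environment variables.
    command: ["redis-server"]
    args: ["--maxmemory", "$(MAXMEMORY)"]
    # Resource Requests & Limits: with an assumed overcommit ratio of 2,
    # requests = limits / 2 (1000m / 2 = 500m, 512Mi / 2 = 256Mi).
    resources:
      requests:
        cpu: 500m
        memory: 256Mi
      limits:
        cpu: 1000m
        memory: 512Mi
    # Ports: expose TCP 6379 under the DNS-compliant name "redis".
    ports:
      - name: redis
        containerPort: 6379
        protocol: TCP
    # Environment Variables: a static value, a Pod field, and a resource field.
    env:
      - name: MAXMEMORY
        value: "256mb"
      - name: POD_NAME
        valueFrom:
          fieldRef:
            fieldPath: metadata.name
      - name: MEMORY_LIMIT
        valueFrom:
          resourceFieldRef:
            resource: limits.memory
    # Referenced ConfigMaps: import every key of a ConfigMap as env variables.
    envFrom:
      - configMapRef:
          name: app-config
    # Volume Mounts: mount the "data" volume at /data.
    volumeMounts:
      - name: data
        mountPath: /data
    # Execute before Stopping: must finish within terminationGracePeriodSeconds.
    lifecycle:
      preStop:
        exec:
          command: ["sh", "-c", "echo stop"]
volumes:
  - name: data
    emptyDir: {}
EOF
```

The fragment's keys belong under a Deployment's `spec.template.spec`. The referenced ConfigMap `app-config` must exist (or be marked `optional: true`), otherwise the container cannot start.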

Reference Information

Storage Volume Mounting instructions

| Type | Purpose |
|---|---|
| Persistent Volume Claim | Binds an existing PVC to request persistent storage. Note: Only bound PVCs (with an associated PV) are selectable. Unbound PVCs will cause Pod creation failures. |
| ConfigMap | Mounts full/partial ConfigMap data as files: |
| Secret | Mounts full/partial Secret data as files: |
| Ephemeral Volumes | Cluster-provisioned temporary volume with features: |
| Empty Directory | Ephemeral storage sharing between containers in the same Pod: |
| Host Path | Mounts a host machine directory (must start with `/`, e.g., `/volumepath`). |
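The following sketch writes one volume of each type listed above, plus a `subPath` mount of a single ConfigMap key. The PVC, ConfigMap, Secret, and container names are hypothetical. The ephemeral volume assumes the cluster has a default StorageClass.

```shell
# Write example volume definitions for each type in the table (hypothetical names).
cat <<'EOF' > volumes-fragment.yaml
# Fragment of a Deployment's spec.template.spec
volumes:
  - name: data                        # Persistent Volume Claim (must be Bound)
    persistentVolumeClaim:
      claimName: app-data
  - name: config                      # ConfigMap: project only selected keys
    configMap:
      name: app-config
      items:
        - key: app.properties
          path: app.properties
  - name: credentials                 # Secret
    secret:
      secretName: app-secret
  - name: scratch                     # Ephemeral volume provisioned per Pod
    ephemeral:
      volumeClaimTemplate:
        spec:
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 1Gi
  - name: shared                      # Empty Directory shared by containers
    emptyDir: {}
  - name: host-dir                    # Host Path (absolute path on the node)
    hostPath:
      path: /volumepath
containers:
  - name: app
    image: busybox:1.36
    volumeMounts:
      - name: config                  # mount one key as a single file
        mountPath: /etc/app/app.properties
        subPath: app.properties
        readOnly: true
EOF
```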

Health Checks
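As a sketch of the two probe types, the following writes a container fragment with an HTTP liveness probe and a TCP readiness probe. The paths, ports, and timing values are assumptions for an nginx container, not platform defaults.

```shell
# Write example liveness/readiness probes for an nginx container (hypothetical values).
cat <<'EOF' > probes-fragment.yaml
# Fragment of spec.template.spec.containers
- name: nginx
  image: nginx:1.16.1
  ports:
    - containerPort: 80
  livenessProbe:                 # on failure, the kubelet restarts the container
    httpGet:
      path: /
      port: 80
    initialDelaySeconds: 10
    periodSeconds: 10
    failureThreshold: 3
  readinessProbe:                # on failure, the Pod leaves Service endpoints
    tcpSocket:
      port: 80
    periodSeconds: 5
EOF
```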

Managing Deployments

Managing a Deployment by using CLI

Viewing a Deployment

- Check that the Deployment was created.

- Get details of your Deployment.
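These checks typically use `kubectl get` and `kubectl describe`. The Deployment name `nginx-deployment` is hypothetical and follows the upstream nginx example.

```shell
DEPLOYMENT=nginx-deployment   # hypothetical name

# Check that the Deployment exists (READY, UP-TO-DATE, AVAILABLE columns).
kubectl get deployments

# Details: selector, replicas, strategy, Pod template, conditions, and events.
kubectl describe deployment "$DEPLOYMENT"
```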

Updating a Deployment

Follow the steps given below to update your Deployment:

- Let's update the nginx Pods to use the `nginx:1.16.1` image.

or use the following command:

Alternatively, you can edit the Deployment and change `.spec.template.spec.containers[0].image` from `nginx:1.14.2` to `nginx:1.16.1`:

- To see the rollout status, run:

Run kubectl get rs to see that the Deployment updated the Pods by creating a new ReplicaSet and scaling it up to 3 replicas, as well as scaling down the old ReplicaSet to 0 replicas.

Running `kubectl get pods` should now show only the new Pods.
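The update steps above can be run as standard `kubectl` commands. This sketch assumes a Deployment named `nginx-deployment` whose container is named `nginx` and whose Pods carry the label `app=nginx`. All three are hypothetical and follow the upstream nginx example.

```shell
DEPLOYMENT=nginx-deployment   # hypothetical name

# Update the image of the container named "nginx".
kubectl set image deployment/"$DEPLOYMENT" nginx=nginx:1.16.1

# Alternative: edit .spec.template.spec.containers[0].image interactively.
# kubectl edit deployment/"$DEPLOYMENT"

# Wait for the rollout to finish (or report that it failed).
kubectl rollout status deployment/"$DEPLOYMENT"

# New ReplicaSet scaled up, old ReplicaSet scaled down to 0.
kubectl get rs -l app=nginx

# Only Pods from the new ReplicaSet should remain.
kubectl get pods -l app=nginx
```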

Scaling a Deployment

You can scale a Deployment by using the following command:
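For example, the following scales a Deployment named `nginx-deployment` (hypothetical) to 10 replicas. The replica count is arbitrary.

```shell
DEPLOYMENT=nginx-deployment   # hypothetical name
REPLICAS=10                   # example target replica count

kubectl scale deployment/"$DEPLOYMENT" --replicas="$REPLICAS"

# Confirm the new desired and ready counts.
kubectl get deployment "$DEPLOYMENT"
```

Scaling changes only the replica count. It does not create a new ReplicaSet or a new rollout revision.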

Rolling Back a Deployment

- Suppose that you made a typo while updating the Deployment, setting the image name to `nginx:1.161` instead of `nginx:1.16.1`.

- The rollout gets stuck. You can verify this by checking the rollout status.
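The following sketch reproduces the stuck rollout and recovers from it. It assumes the hypothetical `nginx-deployment` with a container named `nginx`. Revision `2` is only an example; use `kubectl rollout history` to find the revision you want.

```shell
DEPLOYMENT=nginx-deployment   # hypothetical name

# The typo: nginx:1.161 does not exist, so new Pods cannot pull the image.
kubectl set image deployment/"$DEPLOYMENT" nginx=nginx:1.161

# The rollout stalls; --timeout stops waiting after 60 seconds.
kubectl rollout status deployment/"$DEPLOYMENT" --timeout=60s

# List recorded revisions, then inspect one of them in detail.
kubectl rollout history deployment/"$DEPLOYMENT"
kubectl rollout history deployment/"$DEPLOYMENT" --revision=2

# Roll back to the previous revision (or pick one with --to-revision).
kubectl rollout undo deployment/"$DEPLOYMENT"
# kubectl rollout undo deployment/"$DEPLOYMENT" --to-revision=2
```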

Deleting a Deployment

Deleting a Deployment also deletes the ReplicaSets it manages and all associated Pods.
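For example, assuming the hypothetical `nginx-deployment` whose Pods are labeled `app=nginx`:

```shell
DEPLOYMENT=nginx-deployment   # hypothetical name

# Delete the Deployment; its ReplicaSets and Pods are removed by cascading deletion.
kubectl delete deployment "$DEPLOYMENT"

# Pods may show Terminating briefly before they disappear.
kubectl get pods -l app=nginx
```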

Managing a Deployment by using web console

Viewing a Deployment

You can view a Deployment to get information about your application.

- In Container Platform, navigate to Workloads > Deployments.

- Locate the Deployment you want to view.

- Click the Deployment name to see its Details, Topology, Logs, Events, Monitoring, and other information.

Updating a Deployment

- In Container Platform, navigate to Workloads > Deployments.

- Locate the Deployment you want to update.

- In the Actions drop-down menu, select Update to open the Edit Deployment page.

Deleting a Deployment

- In Container Platform, navigate to Workloads > Deployments.

- Locate the Deployment you want to delete.

- In the Actions drop-down menu (operations column), click Delete and confirm.

Troubleshooting by using CLI

When a Deployment encounters issues, here are some common troubleshooting methods.

Check Deployment status
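For example, the following prints the replica counts of the hypothetical `nginx-deployment` and its status conditions, such as `Available` and `Progressing`.

```shell
DEPLOYMENT=nginx-deployment   # hypothetical name

# READY, UP-TO-DATE, and AVAILABLE replica counts.
kubectl get deployment "$DEPLOYMENT"

# One line per status condition: TYPE=STATUS REASON.
kubectl get deployment "$DEPLOYMENT" \
  -o jsonpath='{range .status.conditions[*]}{.type}={.status} {.reason}{"\n"}{end}'
```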

Check ReplicaSet status
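ReplicaSets created by a Deployment carry its Pod template labels. Assuming the hypothetical label `app=nginx`, you can list and describe them:

```shell
SELECTOR=app=nginx   # hypothetical Pod template label

# DESIRED, CURRENT, and READY counts per ReplicaSet (old ones show 0).
kubectl get rs -l "$SELECTOR"

# Events here reveal Pod creation failures, such as quota or admission errors.
kubectl describe rs -l "$SELECTOR"
```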

Check Pod status
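The Events section of `kubectl describe` usually explains why a Pod is `Pending`, in `ImagePullBackOff`, or in `CrashLoopBackOff`. This example assumes the Pods carry the hypothetical label `app=nginx`:

```shell
SELECTOR=app=nginx   # hypothetical Pod label

# Status, restart count, node, and Pod IP for each Pod.
kubectl get pods -l "$SELECTOR" -o wide

# Scheduling, image pull, and crash details appear under Events.
kubectl describe pods -l "$SELECTOR"
```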

View Logs
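The following sketch reads container logs, assuming the hypothetical label `app=nginx` and Deployment `nginx-deployment`. The `--previous` flag shows output from the last terminated container instance, which is useful after a crash.

```shell
SELECTOR=app=nginx   # hypothetical Pod label
POD=$(kubectl get pods -l "$SELECTOR" -o jsonpath='{.items[0].metadata.name}')

# Current container logs, then logs from the previous (crashed) instance.
kubectl logs "$POD"
kubectl logs "$POD" --previous

# Logs from one Pod of the Deployment (chosen by kubectl), all containers.
kubectl logs deployment/nginx-deployment --all-containers=true
```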

Enter Pod for debugging
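To open a shell in a running Pod (assuming the hypothetical label `app=nginx` and an image that includes `/bin/sh`):

```shell
SELECTOR=app=nginx   # hypothetical Pod label
POD=$(kubectl get pods -l "$SELECTOR" -o jsonpath='{.items[0].metadata.name}')

# Interactive shell in the Pod's default (usually first) container.
kubectl exec -it "$POD" -- /bin/sh

# If the image has no shell, attach an ephemeral debug container instead:
# kubectl debug -it "$POD" --image=busybox:1.36 --target=nginx
```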

Check Health configuration

Ensure that `livenessProbe` and `readinessProbe` are correctly configured and that your application's health check endpoints respond properly. See Troubleshooting probe failures.
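The following shows one way to inspect the configured probes and recent probe failures, assuming the hypothetical `nginx-deployment`. The kubelet records failed probes as events with reason `Unhealthy`.

```shell
DEPLOYMENT=nginx-deployment   # hypothetical name

# Print each container's liveness and readiness probe configuration.
kubectl get deployment "$DEPLOYMENT" \
  -o jsonpath='{range .spec.template.spec.containers[*]}{.name}: liveness={.livenessProbe} readiness={.readinessProbe}{"\n"}{end}'

# Recent probe failures in the current namespace.
kubectl get events --field-selector reason=Unhealthy
```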

Check Resource Limits

Ensure container resource requests and limits are reasonable and that containers are not being killed due to insufficient resources.
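The following sketch checks resources, assuming the hypothetical label `app=nginx`:

- A last termination reason of `OOMKilled` means a container exceeded its memory limit.
- `kubectl top` requires the Metrics API (for example, metrics-server) in the cluster.

```shell
SELECTOR=app=nginx   # hypothetical Pod label

# Requests and limits of every container, one Pod per line.
kubectl get pods -l "$SELECTOR" \
  -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].resources}{"\n"}{end}'

# Last termination reason per container (e.g. OOMKilled).
kubectl get pods -l "$SELECTOR" \
  -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.containerStatuses[*].lastState.terminated.reason}{"\n"}{end}'

# Live CPU/memory usage (needs metrics-server or another Metrics API provider).
kubectl top pods -l "$SELECTOR"
```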